LLMs are deep-learning AI algorithms, trained on very large data sets, that can recognise, summarise, translate, predict and generate content.

While the arrival of ChatGPT has spurred a frenzy among Chinese tech companies to develop domestic rivals, from Baidu’s Ernie Bot to Alibaba Group Holding’s LLM Tongyi Qianwen, the field is still led by US companies, with OpenAI subsequently releasing GPT-4 Turbo and US giant Google making its mark with Bard. Alibaba owns the South China Morning Post.

The country’s crowded market of more than 100 LLMs is constrained by lack of access to advanced chips, strict regulation, censorship of sensitive topics, high development costs and fragmented markets for the technology.

“China faces multiple challenges in developing LLMs as technology gaps widen with the West due to the advent of GPT and Google’s Gemini,” said Su Lian Jye, a chief analyst with research company Omdia. “Limits on computing power due to chip bans, and the limited quality of data from the Mandarin-based internet compared to the English-speaking world, can also be setbacks.”

Some say China’s accelerated AI efforts were spurred in 2017 when AlphaGo, a computer program from Alphabet’s DeepMind Technologies, beat Ke Jie, then the world’s top player of Go – also known as weiqi – 3-0. Go has been played in China since the ancient Zhou dynasty. Now the country is once again up against the clock.

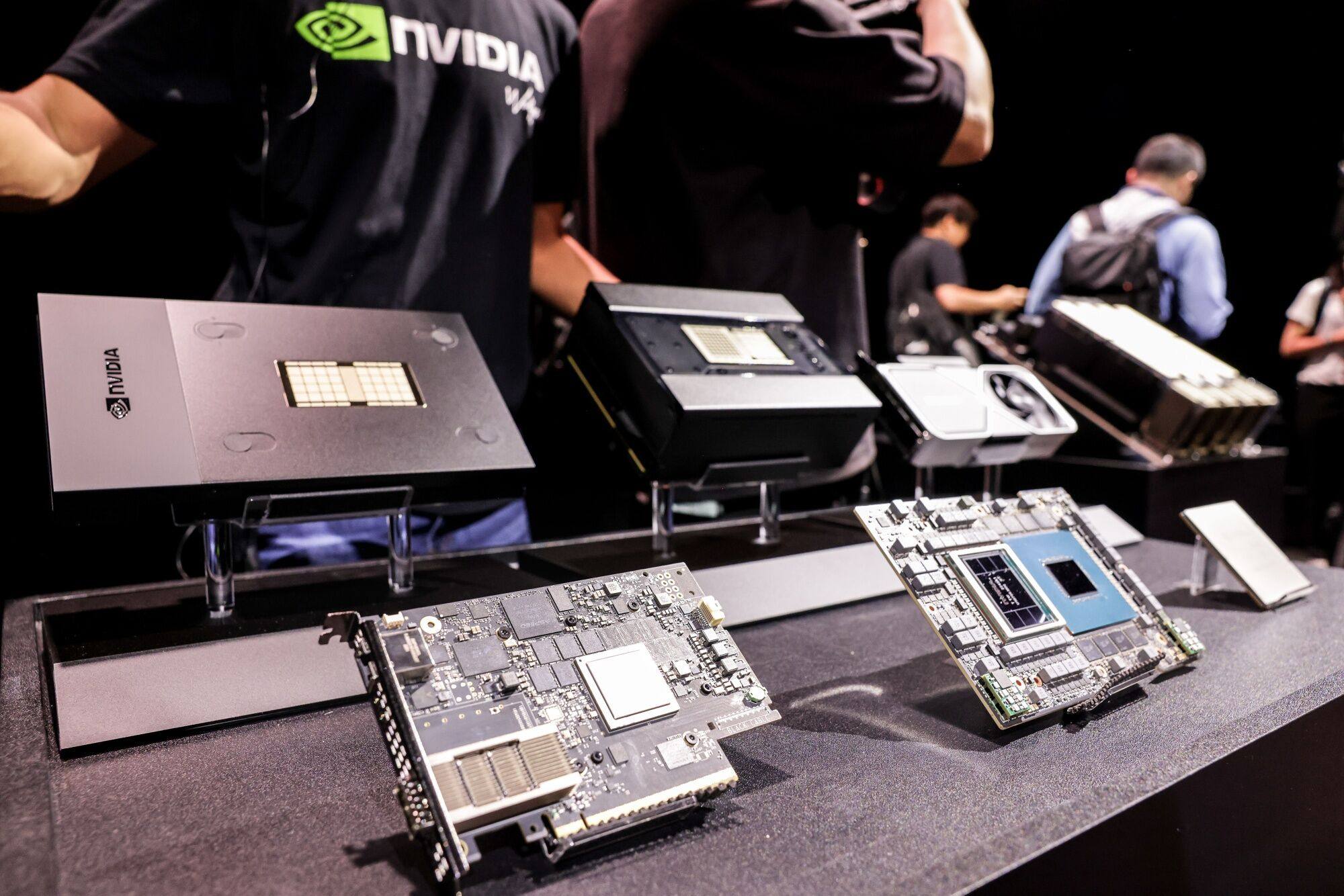

The biggest challenge is the country’s lack of access to cutting-edge graphics processing units (GPUs) from the likes of Nvidia due to US trade sanctions.

These GPUs, including Nvidia’s H100, which costs around US$30,000 per unit, are considered the beating heart of the latest LLMs, determining to a large extent how powerful a model can be. Microsoft, Meta Platforms, Google, Amazon and Oracle have all invested heavily in them.

However, deep-pocketed Chinese tech firms face buying curbs. A month before OpenAI launched ChatGPT, the US government updated its export control rules to block China’s access to advanced chips such as Nvidia’s H100 and A100 on national security grounds.

California-based Nvidia has bent over backwards to alleviate the situation, tailoring slower variants of these chips – the A800 and H800 – for Chinese clients, providing a window of opportunity for the likes of Tencent Holdings and ByteDance to stock up. Mainland China and Hong Kong are responsible for just over 22 per cent of Nvidia’s revenue, with only Taiwan and the US generating more.

In October, however, the US government tightened controls again, barring Nvidia from selling the A800 and H800 to China and threatening to ban any future workarounds.

Speaking at the Reagan National Defense Forum in California earlier this month, US Commerce Secretary Gina Raimondo said: “If you redesign a chip around a particular cut line that enables them [China] to do AI, I’m going to control it the very next day.”

Wang Shuyi, a professor who focuses on AI and machine learning at Tianjin Normal University, said inadequate computing power is one of the primary obstacles to AI model development in China.

“It will be increasingly hard for China to access advanced chips,” Wang said. “Even for Chinese companies with money, without computing power they will not be able to fully utilise high-quality data sources.”

And with domestic firms still well behind the cutting edge when it comes to chip production, China will find it hard to overcome these restrictions any time soon.

Video gaming and social media giant Tencent also warned last month that US restrictions on advanced chip sales to China would eventually impact its cloud services, even though it has a large stockpile of Nvidia AI chips.

“Those most affected are big tech companies that placed orders [for chips] worth billions of dollars that can’t be delivered. This will impact their ability to provide services through their clouds in the future,” said Chen Yu, partner at venture capital firm Yunqi Partners, which has backed AI start-up MiniMax.

Baidu, Alibaba, Tencent and ByteDance were expected to buy 125,000 units of Nvidia H100 GPUs – 20 per cent of all H100 shipments in 2023 – according to data from Omdia.
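
The Omdia figures can be cross-checked with simple arithmetic. The sketch below assumes a flat price of about US$30,000 per unit, the rough H100 figure quoted above, rather than any disclosed contract price. On that assumption, 125,000 units making up 20 per cent of shipments would imply roughly 625,000 H100s shipped in 2023, and the Chinese orders would be worth in the region of US$3.75 billion.

```python
# Back-of-envelope arithmetic on the Omdia figures quoted in the article.
# Assumption: every unit is priced at the article's rough US$30,000 H100 figure;
# actual contract prices are not disclosed in the source.

CHINESE_ORDER_UNITS = 125_000    # Baidu, Alibaba, Tencent and ByteDance combined (Omdia)
SHARE_OF_2023_SHIPMENTS = 0.20   # "20 per cent of all H100 shipments in 2023"
APPROX_UNIT_PRICE_USD = 30_000   # "around US$30,000 per unit" (assumed flat price)


def implied_total_shipments(units: int, share: float) -> int:
    """Total shipments implied if `units` represents `share` of the whole."""
    return round(units / share)


def approximate_order_value(units: int, unit_price: int) -> int:
    """Rough dollar value of an order at a flat per-unit price."""
    return units * unit_price


if __name__ == "__main__":
    total = implied_total_shipments(CHINESE_ORDER_UNITS, SHARE_OF_2023_SHIPMENTS)
    value = approximate_order_value(CHINESE_ORDER_UNITS, APPROX_UNIT_PRICE_USD)
    print(f"Implied 2023 H100 shipments: {total:,}")
    print(f"Approximate value of the Chinese orders: US${value / 1e9:.2f} billion")
```

The result is only an order-of-magnitude check, consistent with Chen’s description of orders “worth billions of dollars”.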

However, Chen pointed out that Chinese start-ups have some protection as they can tap into the computing capabilities of global public cloud services, which are not restricted.

Sanctions have hit hard, though. As US companies from OpenAI to Nvidia have grabbed headlines for their roles in bringing generative AI to the world, some of China’s brightest AI stars of a few years ago, such as Hong Kong-listed SenseTime, have struggled to turn a profit under US restrictions.

“A few companies, including iFlyTek, are working with Huawei Technologies on workarounds to break through chip limits, but their efforts are still hampered by the lack of a mature AI training ecosystem, such as Nvidia’s CUDA,” said a Hangzhou-based AI entrepreneur who declined to be named due to the sensitivity of the matter.

Meanwhile, foreign LLMs such as OpenAI’s GPT series and Google’s newly released Gemini, as well as Google’s AI chatbot Bard, are still not directly available in China due to strict regulatory requirements.

The Cyberspace Administration of China, the agency that polices China’s internet, implemented sweeping AI regulations – effective August 15 – that require reviews of LLMs and oversight of their development, covering both data inputs and outputs. Baidu, for example, had to run Ernie Bot in “trial mode” for months before it was approved for public use.

The structural differences between English and Mandarin, and the sensitivity around political issues – domestic AI chatbots sidestep delicate questions in user tests – also mean that there is a strict divide between the domestic and global markets.

For example, Google’s Bard is available in “230 countries and territories” and only absent in a few places such as mainland China, Hong Kong, Iran and North Korea, according to company information.

China also risks duplicating efforts. As of July this year, 130 LLMs had been released by Chinese companies and research institutes, leading to what has been termed a “tussle of a hundred large models”.

Robin Li Yanhong, co-founder and chief executive of search engine giant Baidu, said last month that the launch of multiple, competing LLMs in China was “a huge waste of resources” and that companies should focus more on applications.

“No platform has emerged as predominant in terms of technology or market size currently,” said Luo Yuchen, CEO of Shenzhen Yantu Intelligence and Innovation. However, Luo added that model development should continue as even GPT-4 is probably not good enough to help companies solve day-to-day tasks handled by humans.

Wang Xiaochuan, CEO of AI start-up Baichuan, told a Tencent tech forum last week in Beijing that many firms should refrain from training their own models and “focus their efforts instead on finding commerciality and scalable AI products by tapping into existing models via cloud services”.

In the meantime, Baidu and Alibaba appear to have mapped out different pathways to AI growth.

Alibaba Cloud has open-sourced its Qwen-72B AI model for research and commercial purposes on machine learning platform Hugging Face and its own open-source community ModelScope. This follows a move by Meta to open-source its Llama 2 model in July.

Alibaba Cloud said an open-source community is crucial for the development of models and AI-based applications. It has made four variants of its in-house LLM Tongyi Qianwen available to open-source communities since August.

In contrast, Baidu has said that its Ernie LLM will remain proprietary. Shen Dou, who heads Baidu’s AI Cloud Group, has indicated that open-sourcing foundational LLMs can be difficult due to “lack of effective feedback loops” and heavy upgrade costs.

AI is certainly an expensive business. Building and training LLMs requires thousands of advanced chips to process huge amounts of data, and that is before the wages of highly trained engineers are counted.

For instance, Meta’s Llama model used 2,048 Nvidia A100 GPUs to train on 1.4 trillion tokens – numerical representations of words or word fragments – and took about 21 days, according to a CNBC report in March.
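
Those figures translate into a rough measure of scale. The sketch below treats “about 21 days” as exactly 21 days of uninterrupted training on all 2,048 GPUs, a simplifying assumption that ignores downtime and restarts. On that basis the run comes to a little over one million GPU-hours, with each GPU processing roughly 377 tokens per second on average.

```python
# Back-of-envelope compute arithmetic for the Llama training run cited by CNBC.
# Assumption: "about 21 days" is treated as exactly 21 days of continuous
# training on every GPU; real runs include downtime, so this is an estimate.

NUM_GPUS = 2_048
TRAINING_TOKENS = 1.4e12
TRAINING_DAYS = 21

HOURS_PER_DAY = 24
SECONDS_PER_DAY = 86_400


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours if every GPU runs for the whole period."""
    return num_gpus * days * HOURS_PER_DAY


def tokens_per_gpu_second(tokens: float, num_gpus: int, days: float) -> float:
    """Average throughput each GPU must sustain to finish in `days`."""
    return tokens / (num_gpus * days * SECONDS_PER_DAY)


if __name__ == "__main__":
    hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
    rate = tokens_per_gpu_second(TRAINING_TOKENS, NUM_GPUS, TRAINING_DAYS)
    print(f"GPU-hours: {hours:,.0f}")
    print(f"Tokens per GPU per second: {rate:,.0f}")
```

Multiplying the GPU-hours by an hourly cloud rental price would give a rough cost estimate, but no such price is given in the source.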

To be sure, while China clearly has a tough fight on its hands when it comes to sourcing advanced computing power and equipment, it does have access to vast treasure troves of data and a huge array of market applications, the two advantages highlighted by President Xi Jinping five years ago. It also has a multitude of resourceful entrepreneurs.

For example, Lee Kai-fu, a prominent venture capitalist and former president of Google China, has turned his AI start-up 01.AI into a venture valued at US$1 billion within just eight months, despite some controversy over its use of the architecture of Meta’s Llama LLM.

And China’s Big Tech firms aim to monetise the technology in one form or another.

“Open or closed, AI is essentially a booster for a cloud business in the eyes of cloud service providers,” said Omdia’s Su. “These providers, whether it’s Tencent or Huawei, eventually intend to make money from renting out computing and storage capabilities to AI developers on their platforms.”

Additional reporting by Ben Jiang