Updated at 8:41 p.m. ET on February 3, 2024

“I am crying,” my editor said when I connected with her via FaceTime on my Apple Vision Pro. “You look like a computer man.”

What made her choke with laughter was my “persona,” the digital avatar that the device had generated when I had pointed its curved, glass front at my face during setup. I couldn’t see the me that she saw, but apparently it was uncanny. You look handsome and refined, she told me, but also fake.

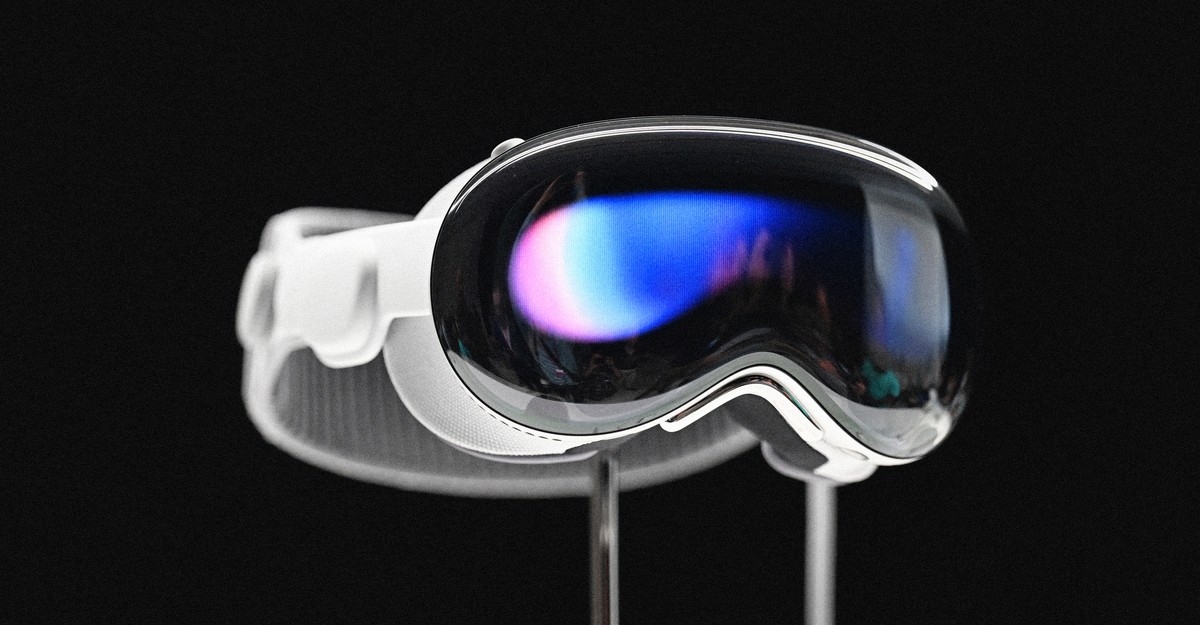

I’d picked up my new face computer hours earlier at the local mall, full of hope for what it would represent. The headset, which weighs as much as a cauliflower and sells for $3,499 and up, is now—after eight months of hype since its announcement—finally available. The Apple Vision Pro offers two innovations in one: a virtual-reality (VR) headset with a higher resolution than most others on the market, and an array of augmented-reality (AR) cameras that allow a wearer to see ordinary computer applications floating in space, and to interact with them via hand gestures. To make the AR work, a knob on the top of the device can dial back your level of “immersion” in a simulated space and replace it with a live video feed of your surroundings, overlaid in real time with computer programs: web browsers, spreadsheets, photo viewers, Disney+. It’s this latter function that most distinguishes the device from other headsets—and from other machines in general. Computers help people work, live, and play, but they also separate us from the world. Apple Vision Pro strives to end that era and inaugurate a new one.

“Maybe if I act like a computer, I will look more normal,” I suggested. When I roboticized my diction, she seemed to think that it helped. I was joking, but then, in a way, I also wasn’t. I did feel like I’d been turned into a robot person of some kind. Is that what the creators of these goggles hoped for, or was it just what I expected? If the Apple Vision Pro wants to reconcile life outside the computer and life within it, the challenge might be insurmountable.

When I placed the Apple Vision Pro on my head and set it up to see the world around me, I found that I was looking out into my living room, across my couches, through the window onto the street, across the craggy winter treetops, and up into the overcast sky. The dual displays, one for each eye, are so sharp and update so quickly that you feel, at first, as though you’re looking at the world as you see it, not as it’s been reconstructed by a headset. At least you feel that way until a row of Apple-app icons materializes in the air in front of you.

The trickery is probably the reason I got physically ill inside the Apple Vision Pro. The round-cornered windows floating above my coffee table looked crisp, but I wasn’t looking only at them. Still feeling stuck in the technological past of earlier in the day, I kept looking down at my phone through the headset. In the Apple Vision Pro, the iPhone’s screen was legible but smeared, as if taken from a dream or an AI’s rendering. Checking email seemed impossible, or irritating at the very least; so did sending texts or using Slack. I took a headset screenshot of my view looking at the phone (and at my lap, and at my living room) and sent it to my friends. They egged me on: Get in the car! Go to the grocery store!

Please do not get in the car, or try to operate any other heavy machinery, while wearing the Apple Vision Pro. The device will convince you that you can see areas beyond it, but it renders that s…